Artificial intelligence keeps adding to its achievements, and voice is one of its newest frontiers. After being used to detect diabetes from a 10-second voice recording, it can now give a voice back to people who have lost theirs.

Devices already exist for people who have lost their voice through injury, laryngeal cancer or vocal cord surgery. But they are often unsightly, painful and expensive. Engineers in California have come up with an alternative that lets these patients make themselves understood without straining.

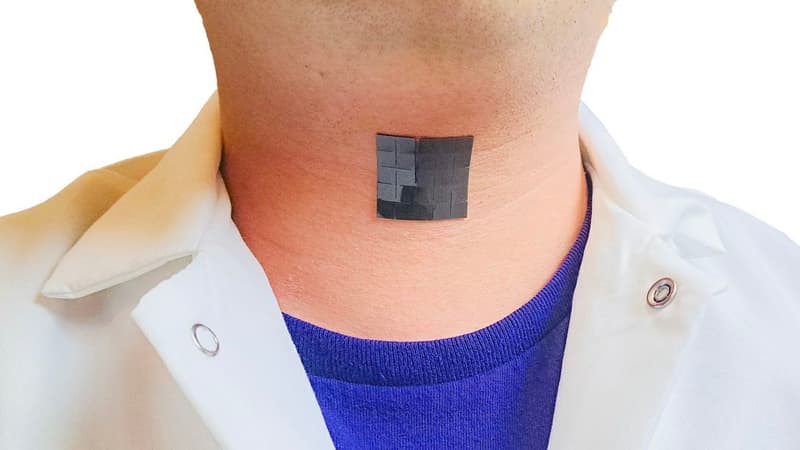

A device the size of a nicotine patch

Researchers at the University of California, Los Angeles (UCLA) have announced that they have developed a smart electronic patch that could help people with temporary or permanent voice disorders to speak.

This simple patch is applied to the throat. The self-powered device, which measures 6.5 cm² and weighs 7 g, contains micromagnets that generate an electromagnetic field when they move. This allows the patch to detect movements of the laryngeal muscles and convert attempts to speak, sometimes silent ones, into electrical signals.

These signals are then analyzed by an artificial intelligence model, which translates them into spoken sentences played through a small built-in speaker.
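For readers curious about that last step, here is a toy sketch of how a fixed set of sentences might be recognized from sensor signals. It is not UCLA's model: the sentence texts, the sine-wave "recordings", the three signal features and the nearest-centroid classifier are all assumptions chosen for simplicity, since the article only says the signals are interpreted by artificial intelligence.

```python
import math
from typing import Dict, List, Sequence

# Placeholder vocabulary: the UCLA test used five short sentences, but these
# particular texts are invented for illustration.
SENTENCES = ["Hello", "Thank you", "I need water", "Yes", "No"]


def features(window: Sequence[float]) -> List[float]:
    """Summarize one window of sensor samples as [RMS, zero-crossing rate, peak]."""
    n = len(window)
    mean = sum(window) / n
    rms = math.sqrt(sum(x * x for x in window) / n)
    # Count sign changes around the mean, a rough proxy for movement frequency.
    crossings = sum(
        1 for a, b in zip(window, window[1:]) if (a - mean) * (b - mean) < 0
    )
    peak = max(abs(x) for x in window)
    return [rms, crossings / (n - 1), peak]


def train_centroids(examples: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each sentence's recordings (nearest-centroid training)."""
    centroids = {}
    for label, windows in examples.items():
        feats = [features(w) for w in windows]
        centroids[label] = [sum(col) / len(col) for col in zip(*feats)]
    return centroids


def classify(window: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the sentence whose centroid is closest (Euclidean) to this window's features."""
    f = features(window)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


def synth(freq: float, amp: float, phase: float, n: int = 200) -> List[float]:
    """Hypothetical stand-in for a patch recording: a plain sine wave, not real laryngeal data."""
    return [amp * math.sin(2 * math.pi * freq * i / n + phase) for i in range(n)]


if __name__ == "__main__":
    # Give each placeholder sentence its own synthetic "muscle signature".
    signatures = {s: (3 + 3 * k, 1 + 0.5 * k) for k, s in enumerate(SENTENCES)}
    training = {s: [synth(f, a, 0.1), synth(f, a, 0.7)] for s, (f, a) in signatures.items()}
    centroids = train_centroids(training)
    f, a = signatures["Thank you"]
    # In the real device, the recognized text would be voiced by the on-board speaker.
    print(classify(synth(f, a, 0.4), centroids))
```

Matching against a closed list of sentences turns the problem into classification rather than free-form speech recognition, which is the kind of task a five-sentence test can score with a single accuracy figure.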

The device was tested on a small group of healthy adults, who each had to say five short sentences. The AI correctly interpreted and reproduced the sentences in 95% of cases. Trials will now begin in patients with various voice disorders, and the researchers expect similar results.

AI and voice, a relationship in the making

AI is increasingly used in medicine in general, and in cancer detection in particular, partly thanks to technology giants that have designed large language models adapted to medicine, such as Google's Med-PaLM 2. These models have been trained on large volumes of medical data so that they can analyze it and help make accurate diagnoses.

AI can also pick up information in the voice that the human ear cannot detect, and analyze signs of brain or neural activity, even very faint ones.

Almost two years ago, Meta AI announced an AI capable of decoding speech from recordings of brain and neural activity. But that tool was designed to decode speech segments “thought” by patients and to “listen” to what they perceived, rather than to give them a voice.

Source: BFM TV