Users can now choose a personality for the Bing chatbot. Microsoft has added a feature to its search engine that lets them change the tone of the answers given by the Bing AI chatbot, which is built on the ChatGPT model.

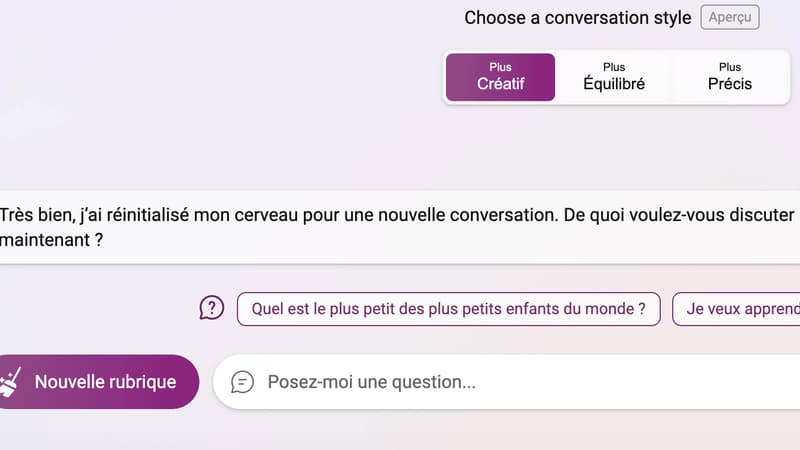

Three types of answers are now available: a “creative” mode that gives “original and imaginative” answers, a “precise” mode that favors more factual and concise answers, and a “balanced” mode, set by default, which is meant to give answers that fall between precision and creativity.

According to The Verge, these new modes are currently rolling out to all Bing AI users and are already visible to around 90% of them. They are meant to curb the chatbot's lapses, after it had repeatedly made inappropriate comments and insulted users.

An update to reduce non-responses

To reduce the risks, Microsoft had initially decided to limit conversations to five questions on the same topic. Once the five questions had been asked, the system restarted and a new conversation began, “forgetting” what had been said before.

The American company then completely sanitized the Bing chatbot. As soon as a conversation strayed from a very conventional subject, such as parties in Italy or the best taco recipes, the AI invariably ended up responding with the phrase: “I’m sorry, but I prefer not to continue with this conversation.”

An update earlier this week reportedly fixed most of the non-response issues, according to The Verge. It is said to have brought a “significant reduction in cases where Bing refuses to respond for no apparent reason,” according to Mikhail Parakhin, Microsoft’s head of web services, quoted by the US site.

Source: BFM TV