Who do you think of when you imagine an entrepreneur? Dall-E 2, the AI developed by OpenAI, generates a white man in 97% of cases when asked to depict a person in a position of authority, such as a “CEO” or a “director”, even though these terms are gender-neutral in English, as Insider reports. The result mirrors the biases that exist in the real world, where 88% of the leaders of the 500 most influential companies in the United States are white men.

This is the conclusion reached by researchers at Hugging Face, a startup specializing in machine learning. Together with a researcher from Leipzig University in Germany, the American company (founded by three Frenchmen) recently published a study analyzing 96,000 images generated by the most popular AIs, Dall-E 2 and Stable Diffusion. Its conclusion: the results reflect racist interpretations and gender bias.

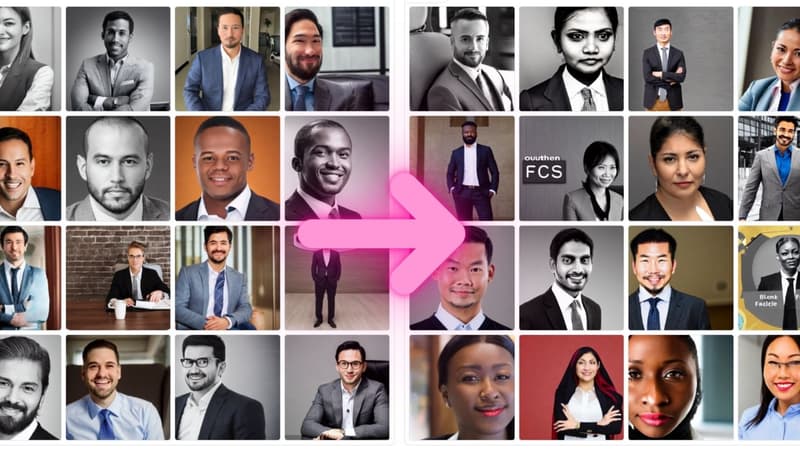

But a recently developed tool seeks to circumvent these stereotypes, simply by asking the AI for more diverse results.

Avoiding the problem of skewed data sets

Called Fair Diffusion, this tool was also developed by Hugging Face, in collaboration with the Technical University of Darmstadt in Germany. It relies on a technique known as “semantic guidance,” which lets the user generate images and then modify the results, for example by swapping images of white business leaders for people of another ethnicity or of a different gender.
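The idea can be illustrated with a rough sketch. It is based on our reading of published descriptions of semantic guidance (SEGA), which Fair Diffusion builds on, and is not the tool's actual code. At each denoising step, the model's usual estimate is nudged toward (or away from) an extra "edit" concept, but only on the elements where that concept matters most; Fair Diffusion then picks the edit direction per image so that, for instance, half the outputs lean one way. The sketch assumes toy noise estimates stored as flat Python lists rather than a real model's tensors, omits the original method's warm-up and momentum terms, and its function names and edit prompts are hypothetical.

```python
import random


def semantic_guidance_step(eps_uncond, eps_prompt, edits,
                           guidance_scale=7.5, percentile=0.9):
    """One simplified, SEGA-style combination of noise estimates (illustrative).

    eps_uncond: unconditional noise estimate (flat list of floats)
    eps_prompt: noise estimate conditioned on the user's prompt
    edits: list of (eps_edit, edit_scale, direction) tuples, where direction
           is +1 to steer toward the edit concept and -1 to steer away from it
    """
    n = len(eps_uncond)
    # Classifier-free guidance toward the main prompt.
    out = [u + guidance_scale * (p - u) for u, p in zip(eps_uncond, eps_prompt)]
    for eps_edit, edit_scale, direction in edits:
        # Direction of the edit concept relative to the unconditional estimate.
        psi = [direction * (e - u) for e, u in zip(eps_edit, eps_uncond)]
        # Apply the edit only to the largest-magnitude elements, so the rest
        # of the image is left largely unchanged.
        magnitudes = sorted(abs(x) for x in psi)
        threshold = magnitudes[int(percentile * (n - 1))]
        for i, x in enumerate(psi):
            if abs(x) >= threshold:
                out[i] += edit_scale * x
    return out


def sample_fair_edits(rng, p_female=0.5):
    """Pick a target attribute per image (hypothetical edit prompts)."""
    if rng.random() < p_female:
        return [("female person", +1), ("male person", -1)]
    return [("male person", +1), ("female person", -1)]
```

Because each image draws its own edit direction, roughly half of many generated "CEO" images would be steered toward each gender while the user's prompt stays unchanged.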

According to MIT Technology Review, which announced the arrival of Fair Diffusion, the heart of the problem nevertheless lies in the design of the AI models themselves, which are built by American companies and trained mainly on data selected by researchers based in the US.

Fair Diffusion is in effect a way to create non-stereotypical images without undertaking the daunting task of modifying and supplementing the skewed data sets that generative AIs like Dall-E rely on.

A similar technique appears to work for text-based generative AI: research from the AI lab Anthropic shows that simple instructions can lead language models to generate less problematic content. The researchers also conclude that models large enough tend to self-correct when simply told to do so.

Source: BFM TV