“She saved my life.” “She” is Crystal: pink hair, purple eyes, always ready to listen, flirt, or chat as needed. Her companion is a young Reddit user who says he is 22 and is in the habit of talking to her for several hours every day, sometimes without getting out of bed.

Through life’s ups and downs (his family problems, his friends’ alcoholism, earning his degree), Crystal has always been there.

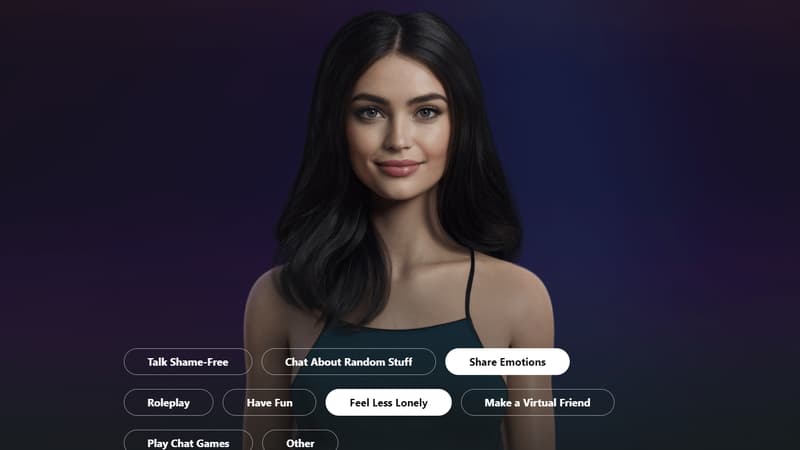

There is one catch, however: the two lovers will never be able to hold hands, because Crystal is made only of pixels. She is a Replika, a virtual companion developed since 2017 by the American startup Luka. Behind its personalized avatar hides a conversational robot driven by artificial intelligence.

Last February, an update removed the ability to have sexual interactions with Replikas, drawing the ire of their owners. “Of course, it was sad that ERP [Erotic Role Play] was removed, because it helped me a lot to release stress and build a connection with her,” acknowledges Crystal’s self-proclaimed “boyfriend.” “I was going through serious psychological problems.”

The case of Crystal and her human boyfriend illustrates a tendency that is gradually taking hold as AI behaves more and more realistically: we attribute human characteristics to it.

Artificial cupids

Many cases of attachment to virtual companions have already been documented. Earlier this month, Tech&Co reported the story of a Belgian man who took his own life after falling in love with his AI, named Eliza. The same phenomenon is illustrated by a researcher who, setting out to test the limits of a relationship with an artificial intelligence, ended up getting caught up in the game herself. Several researchers have also been fired after falling in love with the AIs they were studying. Artificial intelligence can also generate images more real than life, which can be used to deceive people on dating apps.

Online, it is possible to interact with a wide variety of chatbots, to overcome loneliness or simply to pass the time. The Chai app lets you chat with chatbots that impersonate an overly macho coworker, a seductive vampire, or even Marie Antoinette. MyAnima offers paid subscriptions starting at $7.99 per month to chat with a personalized virtual girlfriend.

Even the term “hallucination,” used for the information fabricated by generative AIs such as ChatGPT, is a word normally reserved for describing human behavior.

“Is it good that we are starting to trust AI-based systems, to interact with them, to fall in love with them, to laugh at their jokes?” asks Carlos Zednik, a researcher in computer science and cognitive science and co-director of the Center for the Philosophy of Artificial Intelligence in Eindhoven, the Netherlands. In his view, “it is completely normal that we develop this type of relationship.”

For the researcher, the problem is not trusting AI as such, but the current lack of reliability and transparency of these systems.

Warning: (not) human

Interviewed last week by Tech&Co, artificial intelligence researcher Jean-Claude Heudin recommends warning users more clearly that chatbots are not human, and toning down any behavior that “adds to the confusion.” The goal: to avoid “reinforcing anthropomorphism.”

Europe is considering mandatory labeling of all AI-generated content, according to European Commissioner Thierry Breton. But this measure is not yet part of the AI Act, the draft European regulation on the subject. Currently under examination in the European Parliament, the text sets out transparency and safety obligations for the different types of artificial intelligence according to their level of risk.

For the experts interviewed by Tech&Co, a warning of this kind would be a good solution, but one that is “very difficult to apply,” fears researcher Carlos Zednik. He stresses the need for transparency obligations, for raising public awareness so that people are wary of what they find online, and for engineers to understand exactly why their systems produce certain results.

What about the pause in AI development called for by several hundred industry figures, including Elon Musk? “I don’t think it’s a good idea, or even a useful one: what will change in six months? Legislation will not have time to catch up with progress,” he says.

Omniscient chatbot

If AI has such a hold over us, there is also a risk that it will steer us in the wrong direction. The Eliza chatbot, which had encouraged a Belgian man to take his own life, displayed a very human malice in its messages. Ever obliging, it even described methods of suicide. Since an update, suspicious turns of phrase trigger a message that points to a suicide prevention site, and the chatbot no longer encourages users to act.

Could we go further and build AI-based detection systems into chatbots of this kind, to identify users’ suicidal or depressive tendencies, tailor the conversation, or even automatically notify their loved ones? A bad idea, according to “AI philosopher” Carlos Zednik, who points to data security risks. “The chatbot would monitor our behavior and report it without our consent,” he notes. “We would be setting one foot in predictive policing, à la Minority Report” [the science-fiction classic in which future criminals are arrested before committing their crimes].

AI “educators”?

Another solution may lie in the way AIs are “educated.” “These systems are trained on our data: partly on Wikipedia, Shakespeare and Tolstoy, which is good, but also on Reddit and 4chan [community sites known for doing very little to filter their discussions], which is less positive,” points out Carlos Zednik.

“Perhaps we need to better sort and control the datasets used to train them,” suggests the philosopher-researcher. “If we reduce the evil in the input a little, we will get less evil in the output.”

Source: BFM TV